Here’s the short version: If you calculate data in almost any programming language, very large and very small numbers will be printed using a near-universal standard called scientific notation. If you read this data into Mathematica, a system designed for scientific and engineering mathematics, it quietly replaces each one of those numbers with a different, essentially random value. Thus everything you do with your data from that point is untrustworthy. This is an inexcusable and potentially dangerous situation.

Here’s the longer version: I’ve been using Mathematica since I was at Xerox PARC in the late 1980’s. Mathematica is basically a symbolic and numerical mathematics system designed for engineers and scientists.

Scientific notation (also called “standard form”) is a time-honored way to write numbers that are very large or very small. Set a floating-point variable to a small number, like 0.000000000098, and print it. In virtually every programming language on the planet, from the great-grand-daddy of them all, FORTRAN, to the youngest kid on the block, Swift, you’ll get the same output: 9.8E-11 (some languages print a lower-case e). This way of expressing scientific notation using plain text is about as standard as putting a car’s accelerator pedal on the right and the brake on the left. It’s just about universal.
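
For a concrete check in Java, the language underneath Processing, here is a minimal sketch. It relies only on the standard `Double.toString` conversion that `System.out.println(double)` uses. That conversion switches to the E form for magnitudes below 10^-3 or at or above 10^7 and prints a plain decimal in between. The class name is just for illustration.

```java
// Minimal sketch: what Java's default double formatting (used by println) produces.
// Double.toString switches to "computerized scientific notation" for magnitudes
// below 10^-3 or at/above 10^7, and prints a plain decimal in between.
class DefaultFormatting {
    public static void main(String[] args) {
        System.out.println(0.000000000098); // 9.8E-11
        System.out.println(123456789.0);    // 1.23456789E8
        System.out.println(0.5);            // 0.5
    }
}
```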

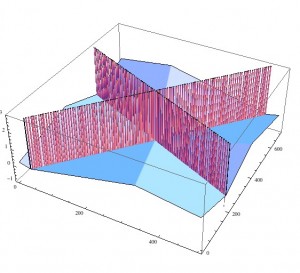

Today I wanted to create height-field plots for some 2D data I’d computed in Processing (a variant of Java). The values were all in the range [0,2]. I thought I’d use Mathematica’s nice plotting tools to create the figures. So I wrote out my data from Processing in the standard way, just calling println(), along with all the braces, commas, and other syntax Mathematica needed to create a 2D array. Here was the result:

Those vertical spikes are wrong. They definitely do not belong. And they all seem to be lined up where the value of the plot is about zero. The plot only shows the values up to 3, but I asked Mathematica for the largest value in the data, and it told me it was just under 23. This made no sense to me; I was sure my data never went above 2. So I added a test and print statement in my Processing code so any values greater than 2 would be printed out to the console. It never printed anything. Yet Mathematica kept insisting there were lots of values in the 20’s in there. Even more mysteriously, Mathematica was telling me my smallest value was -0.8, when I knew my data was always 0 or positive.
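
To make the failure concrete, here is a hypothetical reconstruction of that export step in plain Java. The original Processing code isn't shown, so `toMathematicaList` and the sample grid are assumptions. If each value is written with default formatting, as `println()` does, any value very close to zero comes out in E notation. That matches where the spikes lined up.

```java
import java.util.StringJoiner;

// Hypothetical reconstruction of the export step: write a 2D array as a
// Mathematica nested list {{a, b, ...}, {...}} using Java's default number
// formatting, which is what a plain println() produces.
class NaiveExport {
    static String toMathematicaList(double[][] grid) {
        StringJoiner rows = new StringJoiner(", ", "{", "}");
        for (double[] row : grid) {
            StringJoiner cells = new StringJoiner(", ", "{", "}");
            for (double v : row) {
                // Default formatting: tiny values become e.g. 9.8E-11.
                cells.add(String.valueOf(v));
            }
            rows.add(cells.toString());
        }
        return rows.toString();
    }

    public static void main(String[] args) {
        double[][] grid = { {1.5, 0.000000000098}, {0.25, 2.0} };
        // Prints {{1.5, 9.8E-11}, {0.25, 2.0}}; Mathematica reads 9.8E-11 as 9.8*E - 11.
        System.out.println(toMathematicaList(grid));
    }
}
```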


After over an hour, I found the problem. Mathematica does not know how to read scientific notation. Try opening a new Mathematica notebook (I have Version 9), type 9.8E-11, and press Shift+Enter to evaluate it. The value you get back? 15.6392. Not 0.000000000098. It’s not even close. What is going on?

The answer has a few parts. First, Mathematica uses the symbol E for Euler’s constant (about 2.718). Second, Mathematica allows implied multiplication, so 2x means 2*x. Finally, and here’s the kicker: Mathematica thinks numbers in scientific notation are bits of arithmetic to evaluate. Yes, that’s right: a program whose entire intended audience is people who work professionally with numbers and scientific notation will not read that notation properly. That’s bad enough, but here comes the worst part: It doesn’t even tell you.

When Mathematica sees 9.8E-11, it reads this as (9.8*E)-11, which is about 15.6. It doesn’t warn you that it’s taking your standard notation, turning it into a little piece of arithmetic, computing the result, and using that value. Instead, it just replaces the value you clearly specified with an essentially random (and, in this case, vastly bigger) number.
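
The same arithmetic is easy to reproduce outside Mathematica. This Java sketch computes both what 9.8E-11 should mean and what Mathematica evaluates it to. It assumes the exponent is written with an explicit minus sign, as it is here. The method names are hypothetical.

```java
import java.util.Locale;

// Minimal sketch comparing the intended value of "9.8E-11" with the value
// Mathematica computes when it reads E as Euler's number and juxtaposition
// as multiplication: (9.8 * E) - 11.
class MisreadValue {
    // Intended meaning: mantissa * 10^exponent.
    static double intended(double mantissa, int exponent) {
        return mantissa * Math.pow(10, exponent);
    }

    // What gets evaluated for text of the form "mantissa E-n":
    // mantissa * E, then minus n (the exponent is negative, so adding it subtracts n).
    static double misread(double mantissa, int exponent) {
        return mantissa * Math.E + exponent;
    }

    public static void main(String[] args) {
        System.out.println(String.format(Locale.ROOT, "%.1e", intended(9.8, -11))); // 9.8e-11
        System.out.println(String.format(Locale.ROOT, "%.4f", misread(9.8, -11)));  // 15.6392
    }
}
```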

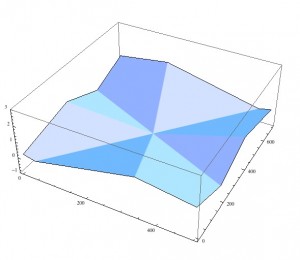

I went back to my Processing code and changed the print statement to something that wouldn’t use scientific notation, and I got this plot:

Much better. It was easy to make the change, but I wasted an hour figuring this out.
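
The post doesn't say exactly which print statement replaced `println()`, so the following is only one possible approach, sketched in Java. `BigDecimal.valueOf(v).toPlainString()` keeps the digits `Double.toString` would choose but never writes an exponent. The helper name `plain` is hypothetical.

```java
import java.math.BigDecimal;

// One possible fix (an assumption, not necessarily the author's): print every
// value as a plain decimal so no E ever reaches Mathematica.
class PlainExport {
    // BigDecimal.valueOf(double) starts from Double.toString's digits;
    // toPlainString() then writes them out without an exponent field.
    static String plain(double v) {
        return BigDecimal.valueOf(v).toPlainString();
    }

    public static void main(String[] args) {
        System.out.println(plain(0.000000000098)); // 0.000000000098
        System.out.println(plain(1.5));            // 1.5
    }
}
```

This assumes finite values: `BigDecimal.valueOf` throws `NumberFormatException` for NaN or infinity, and very large magnitudes come out as long runs of digits.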


This is absurd. I know that some other systems don’t read scientific notation properly either, but they have the good grace to complain about it (they usually stumble on interpreting 9.8E and report an error). But Mathematica just goes ahead and computes a value for you. No warning, no nothing. In its defense, the Mathematica documentation describes its own scientific-notation-style input format that works properly: our example would be written 9.8*^-11. But this idiosyncratic syntax doesn’t change the fact that if you enter a number in standard scientific notation, it is not interpreted that way, and Mathematica doesn’t even warn you. So you’d have no reason to search the documentation to discover this unusual format.
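
Alternatively, a program that produces data for Mathematica could write the documented `*^` form directly. Here is a minimal Java sketch. It assumes finite values and relies on `Double.toString` using `E` only as its exponent marker. `toWolframNumber` is a hypothetical name.

```java
// Minimal sketch: emit numbers in Mathematica's documented *^ input form,
// e.g. 9.8*^-11, by rewriting the exponent marker Java's default formatting uses.
class WolframNumber {
    static String toWolframNumber(double v) {
        // For finite values Double.toString uses "E" only as the exponent marker,
        // e.g. "9.8E-11" or "1.0E8"; plain decimals such as "1.5" pass through unchanged.
        return Double.toString(v).replace("E", "*^");
    }

    public static void main(String[] args) {
        System.out.println(toWolframNumber(0.000000000098)); // 9.8*^-11
        System.out.println(toWolframNumber(1.0E8));          // 1.0*^8
        System.out.println(toWolframNumber(1.5));            // 1.5
    }
}
```

This keeps the output compact while sidestepping the ambiguity, at the cost of producing text that only Mathematica will read this way.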

I can’t imagine that the authors of Mathematica are unaware of this. Perhaps they rationalize it by saying that they are “correctly” interpreting 9.8E-11 as an arithmetic expression. In a very narrow, technical sense, that would be correct, but I believe that in the real world people expect that numbers printed in scientific notation will be read as numbers in scientific notation. The potential problems from not doing so are enormous: if I hadn’t plotted my input, I might never have noticed that just a handful of values (in my case, just 0.2% of them) were being replaced with vastly larger, essentially unrelated numbers. Imagine the repercussions this could have in any engineering or scientific situation where numbers matter.

This is not idiosyncratic; it’s inexcusable.

You can use ImportString with a “Table” or “List” format argument.

My point is not that you cannot import such notation; it’s that a natural way of doing so misinterprets your data, and the system never tells you. You’d never have reason to look up alternative ways to read in your data.